Four criteria characterize practical software development: speed, maintainability of the product, and scalability of both the development team and the software. In the course of a digital project at a German retail chain, we conducted a lengthy interview with one of the client’s IT experts. The company develops its specialist apps using microservices wherever possible. This article reproduces key findings from the interview in edited form. The interview partner’s statements are in italics, our notes in regular type. We will be happy to provide the long version on request.

To prepare for the meeting, we presented the interview partner with a catalog of criteria and questions. The IT expert prioritized the content from his point of view. The result surprised us. Of all things, the much-vaunted freedom that agile process models in particular grant app developers only appears at the end of the list. Technical topics such as modularization, rollout and test automation, and monitoring of microservices are at the top of the list.

Only then do organizational aspects such as operational structures and the work culture have an impact. Here, agile process models prove to be advantageous. However, the main driver of project success is not the work culture, but the technical repertoire used to solve a technical problem.

To avert a military conflict between Ukraine and Russia, the threat of excluding Russia from the global financial communication system SWIFT has been raised. The U.S. is in the process of introducing legislation on specific financial sanctions. But why does the U.S. have such a hold on SWIFT in the first place? How hard would this sanction actually hit Russia? And what would it mean for Europe?

As a cyber security and SWIFT expert, Jan Oetting has been a much-cited interview partner in the media on this topic in recent weeks. For example, his expertise was requested by Spiegel, WiWo, Tagesschau, as well as Schweizer Rundfunk and Hessischer Rundfunk.

Here he explains once again the most important background and context in order to understand the extent of the planned sanctions.

Originating as a digital version of the bulletin board, employee or intranet portals today offer centralized, protected, uniformly designed access to internal company information and IT applications. Setting up such a portal is anything but trivial. In addition to selecting the information and apps that are to be made available to employees centrally, it is also necessary to clarify how the portal is to be implemented technically. To help you decide, we present alternative portal construction styles in their historical development here.

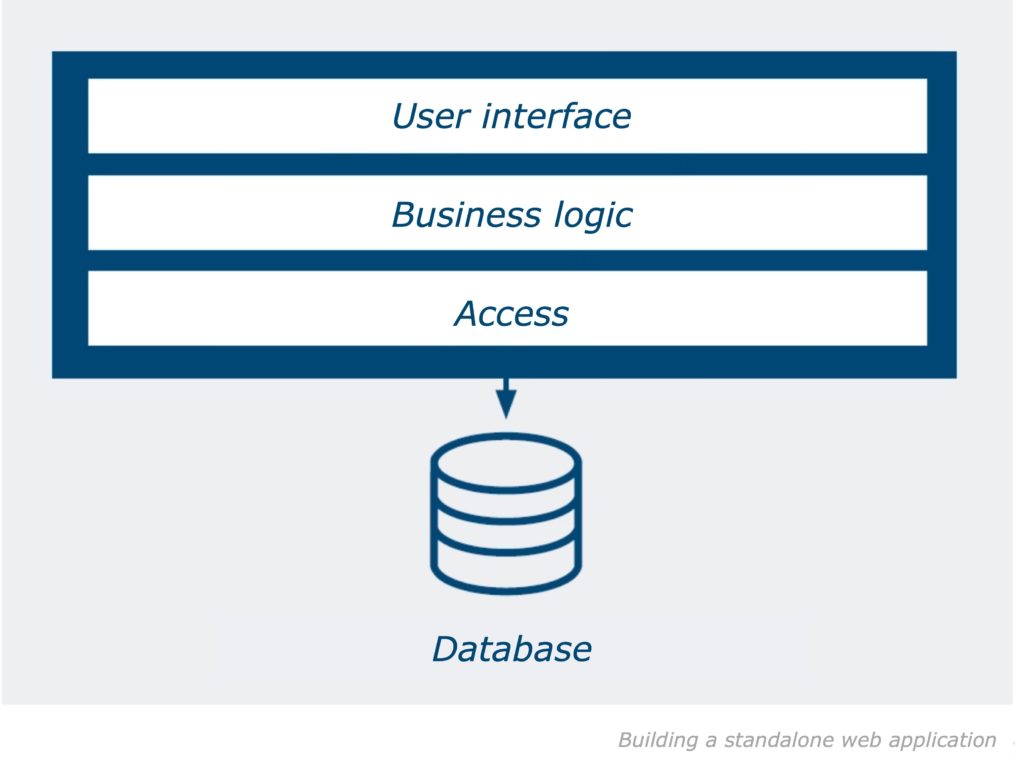

In the early days of the Internet, the web server completely rebuilt the page displayed in the browser after each input. This drove up the network load, led to waiting times, and made interaction bumpy. AJAX (Asynchronous JavaScript and XML), a data transfer model developed in the noughties, provided a remedy: only changed or additionally requested page elements are reloaded from the server. This makes modern web apps behave similarly to local desktop software. With technologies such as AJAX, intranet portals have been developed as stand-alone web applications (single web applications, SWA) without special frameworks. The portal application forms a separate, self-contained environment with its own user interface, logic and data access layer.

The SWA combines functions and information from administrative and specialist departments in a monolithic architecture. Developers thus have a free hand in the technical implementation and are not subject to any architectural specifications. However, there are a number of disadvantages. For example, the implementation effort is high, since each function is implemented from scratch. In consultation with the functional managers, department-specific use cases must also be mapped. In addition, the functions are interdependent. This makes development, maintenance and expansion equally costly. If one function is shut down for maintenance, all other functions are also shut down.

Portal 2.0: Form and Content separation

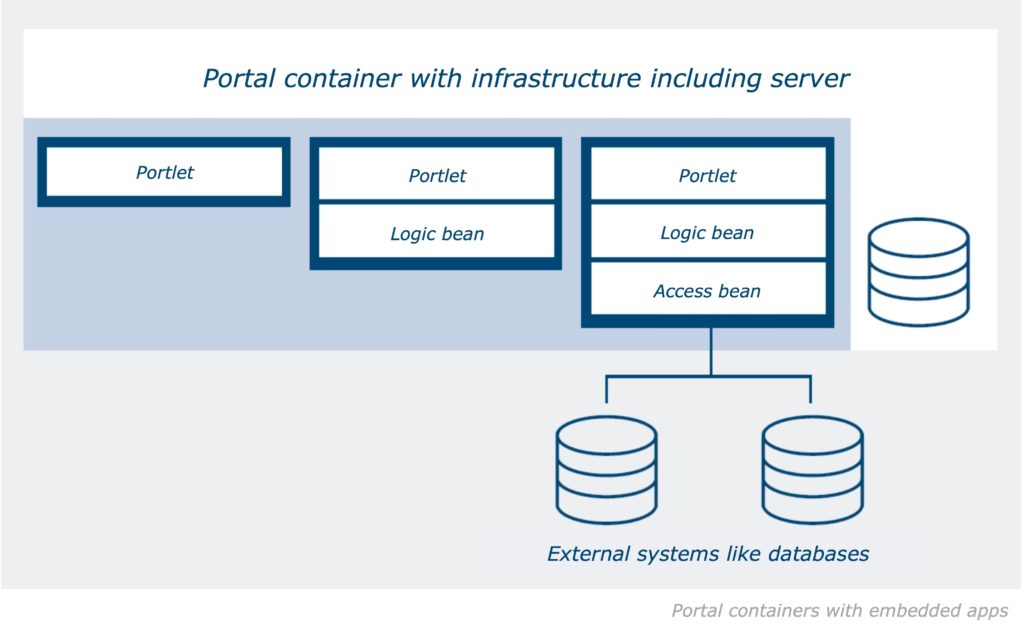

To alleviate these disadvantages, so-called portal containers were developed, large server systems in whose infrastructure the apps for the employees could be implemented. Apart from any external data sources, all apps run on the portal server. The user interface is also calculated entirely on the server side and sent from there to the browser.

The user interface and the application or business logic of the apps offered on the portal are developed separately on the software stack of the portal server: user interface elements as reusable portlets, data access and logic components as JavaBeans that can likewise be used multiple times and are integrated via standard interfaces. Large portal servers such as IBM WebSphere are usually based on the enterprise edition of the Java platform (Java EE).

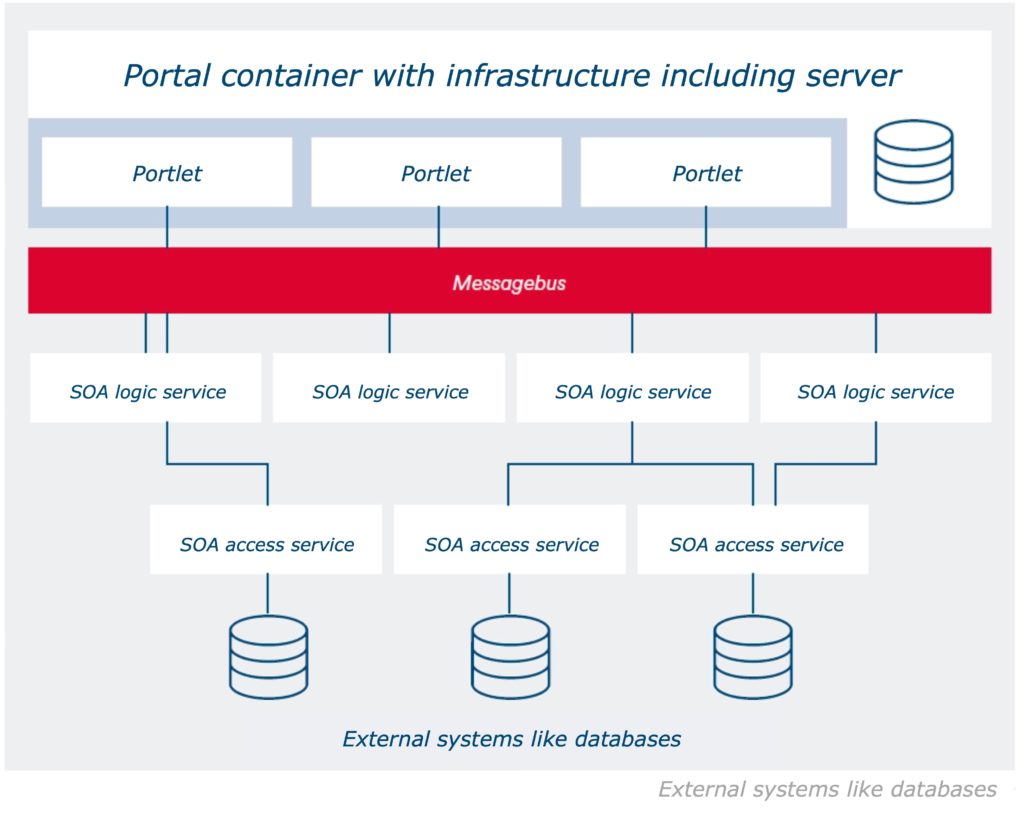

The next evolutionary step of the portal container is the integration of an SOA (service-oriented architecture) layer. Business logic and external data access are no longer implemented in the container, but are outsourced to a service architecture. They are called via a message bus or broker. The portlets access the business logic only via the standardized interface of the bus or broker. The bus or broker distributes the messages to the components of the business logic or stores them temporarily if a target component cannot be reached.
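The store-and-forward behavior of such a broker can be illustrated with a minimal in-memory sketch. The class `Broker` and its method names are hypothetical and not taken from any real portal or messaging product; a production broker would add persistence, acknowledgements and error handling.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict

Handler = Callable[[dict], None]


class Broker:
    """Toy message broker: delivers messages or buffers them (illustrative only)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}
        self._pending: Dict[str, Deque[dict]] = defaultdict(deque)

    def register(self, service: str, handler: Handler) -> None:
        """Attach a business-logic component and flush messages buffered for it."""
        self._handlers[service] = handler
        queue = self._pending[service]
        while queue:
            handler(queue.popleft())

    def unregister(self, service: str) -> None:
        """Simulate a component that has become unreachable."""
        self._handlers.pop(service, None)

    def send(self, service: str, message: dict) -> bool:
        """Deliver at once if the target is reachable, otherwise buffer.

        Returns True if the message was delivered immediately.
        """
        handler = self._handlers.get(service)
        if handler is None:
            self._pending[service].append(message)
            return False
        handler(message)
        return True

    def pending(self, service: str) -> int:
        """Number of messages currently buffered for a service."""
        return len(self._pending[service])
```

A portlet would only ever call `send`; whether the target component is currently reachable stays hidden behind the broker interface.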

Compared to a SWA, portal containers have several advantages. The portal server provides an infrastructure with standard functions, including single sign-on and single sign-off at the portal, as well as a roles and rights system. The server also allows for the creation of a unified user interface and dashboard. In the SOA, functions from back-end systems are linked to operational services. These can be scaled by load distribution and decoupled from the individual system to a certain extent. In addition, they are reusable. Multiple apps or more complex services can rely on the same SOA resource. Their abstraction in the SOA layer makes business logic and backend functions platform-neutral. This makes it possible to program SOA services in different languages. Communication with and among the services runs via interfaces.

However, portal containers also have weaknesses. If the container fails, the entire portal goes offline. Fail-safety and scaling can only be achieved by maintaining several instances of the entire server. The modularization of the apps does not protect against forced downtime. Although it facilitates the division of labor among developers, the basic framework of the portal architecture remains monolithic and thus not very maintenance-friendly. In addition, there is a strong dependence on the manufacturer of the portal server (lock-in effect). This applies to (security) updates on the one hand, and to the inevitable commitment to the programming language of the server’s proprietary interfaces on the other. This makes migrating apps to portal servers from other providers risky and extremely costly. For the same reason, existing (legacy) applications can only be migrated to the portal container with great effort.

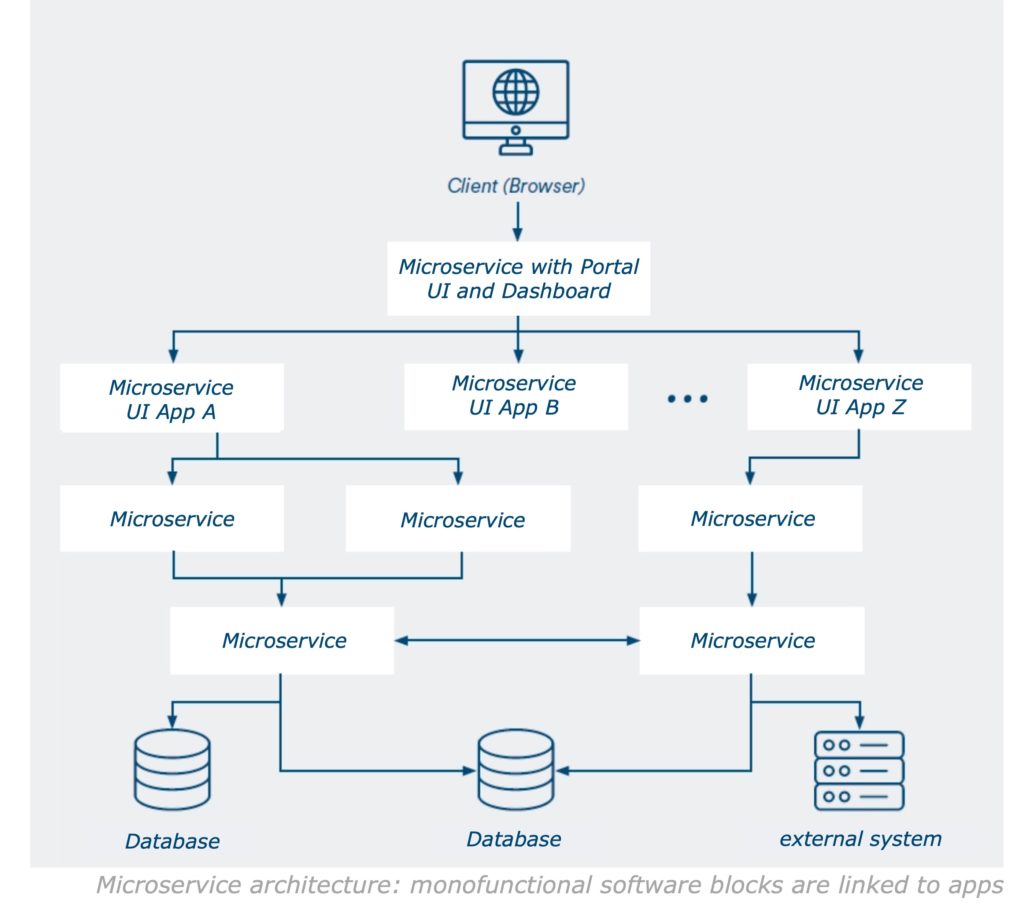

To compensate for the disadvantages of the still quite monolithic portal servers, there is a tendency today towards a lighter architecture based on so-called microservices. A microservice is a self-sufficient piece of software that provides a monofunctional service. By linking any number of microservices, multifunctional apps are created. Once implemented, a service can be used in several apps.

The art of microservice architecture lies in tailoring the services so that the apps built from them do not become too complex. In particular, the function of such a module must not be defined too narrowly. Rather, each service should implement exactly one clearly delimited function. The front end of the portal can be rendered either on the server or on the client.

In both cases, the portal, the apps offered there, and the respective interfaces are developed as independent modules. For server-side rendering, web frameworks such as Apache Wicket, JSF or Spring MVC are used. For client-side rendering, ECMAScript-based frameworks such as Angular, React or Vue are used. Rendering on the client leads to a consistently fast response time of the pages.

The strengths of the microservice architecture include its modularity, scalability, ease of maintenance, and resilience. Because microservices are quickly programmed and reusable, they can be used to compose, extend, or modify apps with little effort. This construction style is well suited to an agile process model. The services are scalable independently of each other: components that are used in several apps or are called particularly frequently can be provided in more instances than those that are rarely used. And because each microservice represents only one function, it is small enough to be understood, maintained, or replaced by developers. Resilience shows as high availability, achieved by distributing instances across multiple servers. The risk of failure is taken into account during implementation: when an instance fails, another steps in and the app continues to run. Thanks to the encapsulation of the services, it is theoretically possible to program each microservice in a different language. Data exchange among the services runs via standardized, language-neutral interfaces.
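How "another instance steps in" might look from the caller's side is sketched below. This is a simplified illustration under stated assumptions: the function `call_with_failover`, the exception `AllInstancesFailed` and the two demo instances are hypothetical, and real deployments usually delegate this task to a load balancer or service mesh.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


class AllInstancesFailed(RuntimeError):
    """Raised when no instance of a service could be reached."""


def call_with_failover(instances: Sequence[Callable[[], T]]) -> T:
    """Try the instances in order and return the first successful result."""
    errors: List[Exception] = []
    for instance in instances:
        try:
            return instance()
        except ConnectionError as exc:
            # This instance is down; remember the error and try the next one.
            errors.append(exc)
    raise AllInstancesFailed(f"{len(errors)} instance(s) unreachable")


# Two hypothetical instances of the same microservice.
def instance_a() -> str:
    raise ConnectionError("instance A is down")


def instance_b() -> str:
    return "price list v3"
```

Calling `call_with_failover([instance_a, instance_b])` returns the answer of the second instance, so the app keeps running although the first one has failed.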

Like the older portal models, the microservice architecture has its downsides. These result from the large number of services. Compared to a portal container, the network load and latency increase because of the communication between the services. In addition, the complexity of any system increases with the number of its components. Even though microservices are separately usable software modules, it is still necessary to coordinate them functionally and to control their interaction via interfaces. This interdependency must be taken into account as early as the formulation of the use cases. The way the planned apps are cut into microservices should be chosen so that it minimizes the complexity of the overall system.

During testing, live operation and maintenance, software blocks distributed across multiple machines or data centers also cause more effort than a monolith running on a single server.

Among other things, the interaction of the microservices must be tested extensively and the load distributed among the instances according to several parameters. Logging and monitoring are also difficult: since each microservice logs its own usage, administrators need special tools such as the ELK stack (Elasticsearch, Logstash, Kibana) to get an overall view.
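The idea of an overall view can be sketched in a few lines: per-service logs are merged into one timeline, and a single request is followed across services. This is not how the ELK stack works internally. The `LogEntry` fields, in particular the `request_id` used for correlation, are assumptions made for the illustration.

```python
import heapq
from typing import Iterable, Iterator, List, NamedTuple


class LogEntry(NamedTuple):
    timestamp: float   # seconds since some common reference time
    service: str
    request_id: str    # assumed correlation ID passed along between services
    message: str


def merge_logs(*streams: Iterable[LogEntry]) -> Iterator[LogEntry]:
    """Merge per-service logs, each already sorted by time, into one timeline."""
    return heapq.merge(*streams, key=lambda entry: entry.timestamp)


def trace(request_id: str, *streams: Iterable[LogEntry]) -> List[LogEntry]:
    """Follow a single request across all services in chronological order."""
    return [e for e in merge_logs(*streams) if e.request_id == request_id]
```

Without a shared identifier such as `request_id`, entries from different services could only be related by their timestamps, which is one reason why distributed logging takes more effort than logging in a monolith.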

Although the portal architectures presented here (SWA, containers, microservices) can be understood as stages of an evolution, all three are still current. The decision in favor of one of these models requires thorough consideration, especially since intranet offerings are not only becoming larger and more complex, but are also integrating more external web resources. The first thing to clarify is what information and apps should be made available to employees. Then it’s a matter of the technical requirements: What base load and what peak loads can be expected? Which risks of failure do we provide for and how? How far and how fast must the portal solution be able to scale? How well does our IT staff know the relevant models, systems and tools? The answers to such questions should not only be based on the status quo, but also on future needs.

Resource planners allocate personnel to upcoming work in terms of time and location. This is an operational task with a short- to medium-term time horizon. Numerous constraints are incorporated into the shift plan, including:

A deployment plan that takes all these factors into account is highly complex, and the effort required increases with the number of employees and restrictions. In medium-sized to large companies, this task cannot be accomplished with paper and pencil or spreadsheets alone.

The first step is to determine and prioritize the relevant factors within the company. Some restrictions are “hard”, i.e., mandatory, like a legal requirement, while others are “soft”, i.e., optional, like the deployment wishes of the employees. Especially in larger companies, the sheer number of these constraints calls for intelligent IT systems that ideally do part of the thinking. This is where humans reach their limits. However, human planners should retain control over the plans and always have the last word.
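The difference between hard and soft restrictions can be made concrete with a small sketch: hard constraints filter out inadmissible assignments, soft constraints merely rank the admissible ones. All names, the two example constraints and the greedy strategy are assumptions for illustration; real planning systems use far more elaborate optimization methods.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Employee:
    name: str
    qualifications: Set[str]
    hours_planned: int = 0
    max_hours: int = 40                                       # hard: weekly limit
    preferred_shifts: Set[str] = field(default_factory=set)   # soft: wishes


@dataclass
class Shift:
    shift_id: str
    required_qualification: str
    hours: int


def is_allowed(emp: Employee, shift: Shift) -> bool:
    """Hard constraints: a violation rules the assignment out."""
    return (shift.required_qualification in emp.qualifications
            and emp.hours_planned + shift.hours <= emp.max_hours)


def penalty(emp: Employee, shift: Shift) -> int:
    """Soft constraints: a violation only makes the assignment less attractive."""
    return 0 if shift.shift_id in emp.preferred_shifts else 1


def assign(shifts: List[Shift], staff: List[Employee]) -> Dict[str, Optional[str]]:
    """Greedy planner: per shift, pick the allowed employee with the lowest penalty."""
    plan: Dict[str, Optional[str]] = {}
    for shift in shifts:
        candidates = [e for e in staff if is_allowed(e, shift)]
        if not candidates:
            plan[shift.shift_id] = None   # left open for the human planner
            continue
        # Ties are broken in favor of the employee with fewer planned hours.
        best = min(candidates, key=lambda e: (penalty(e, shift), e.hours_planned))
        best.hours_planned += shift.hours
        plan[shift.shift_id] = best.name
    return plan
```

Shifts that no one may take are returned as `None` rather than forced onto someone, which keeps the last word with the human planner.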

Scoffers see large-scale projects as an example of entropy, the universe’s inherent tendency toward chaos. According to them, such projects break down into six phases: Enthusiasm, disillusionment, panic plus overtime, hunting down the guilty, sanctioning the innocent, praising the uninvolved. The fact that it can be done differently is rarely seen, but it does happen – provided that you call off the hunt for the guilty in favor of productive steps. But let’s take it one step at a time.

Indeed, our project with a retail chain started enthusiastically. The company wanted to introduce a loyalty program, including a customer card, advertising campaign and value-added offers, that stood out from the competition’s programs and tied consumers more closely to the brand. Consileon supported the project from strategic planning and software development to pilot deployment of the loyalty card. But halfway through, the project team’s momentum visibly gave way to disillusionment. The competition was not sleeping, and their bonus programs were unexpectedly growing in membership. The added value of another loyalty card in the wallet could hardly be conveyed. In view of the costs incurred by then, it was only a stone’s throw to phase three: panic!

Instead of hunting down the culprits, however, our client decided on a more constructive fourth phase: a strategic change of course in the form of moving away from developing an individual solution to working with a specialist loyalty program provider. This also opened up the opportunity to expand the loyalty program to additional channels. The multi-channel approach includes the website, online presences, mobile apps, and interactive devices at the store locations. This also put an end to project phases five and six: innocent people were left untroubled, while the uninvolved had to earn their praise elsewhere. Instead, the true success story of the major project now began.

How many loaves of mixed rye bread should a bakery chain bake next Monday? Ideally, every customer gets the bread of their choice without a loaf left over. Many factors go into the calculation, including sales in the previous week, whether next Monday is a holiday, or whether the bread is currently being advertised. Once these factors are translated into input values, a computer program can calculate demand in several ways, for example:

The prediction becomes more accurate the more data is included, e.g. the Monday sales curve for mixed rye bread over recent years. The program learns from such data, hence the term machine learning (ML).
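To give a feel for what "learning from data" means here, the following sketch uses a deliberately simple method chosen for illustration, not one taken from the article: it averages past Monday sales separately for each combination of the two factors mentioned above. The names `DaySales`, `fit` and `predict` as well as any figures passed in are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, NamedTuple, Tuple


class DaySales(NamedTuple):
    loaves_sold: int
    is_holiday: bool
    is_advertised: bool


class Model(NamedTuple):
    group_means: Dict[Tuple[bool, bool], float]
    overall_mean: float


def fit(history: List[DaySales]) -> Model:
    """'Learn' the average demand for each combination of the two factors."""
    groups: Dict[Tuple[bool, bool], List[int]] = defaultdict(list)
    for day in history:
        groups[(day.is_holiday, day.is_advertised)].append(day.loaves_sold)
    return Model(
        group_means={key: mean(values) for key, values in groups.items()},
        overall_mean=mean([day.loaves_sold for day in history]),
    )


def predict(model: Model, is_holiday: bool, is_advertised: bool) -> int:
    """Forecast by group average; unseen combinations fall back to the overall mean."""
    return round(model.group_means.get((is_holiday, is_advertised),
                                       model.overall_mean))
```

Every additional Monday in `history` shifts the averages, so the forecast adapts to the data without anyone changing the program.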

Brick-and-mortar retail is showing increasing interest in indoor navigation and the challenges and opportunities it presents. For the customer, it means a more positive shopping experience, while for the operator it represents a unique selling proposition and gives them the opportunity to collect valuable data on shopper behavior.

An indoor positioning system (IPS) is a network of devices that can locate people or things in rooms without GPS reception. Via an IPS connection, navigation devices can also be used in enclosed spaces such as exhibition halls, shopping malls, department stores or supermarkets.

The opportunities that indoor navigation opens up for stationary retail extend far beyond finding an item on the shelf. The cell phone as a helper when working through the shopping list marks only the first stage of a digital retrofit of the sales floor.

IPSs make use of (tri)lateration: if the positions of two points in space are known, the location of a third point, for example the constantly changing location of a mobile device in a building, can be calculated from them according to the rules of triangle geometry. Commercial IPSs track this mobile point either with fixed tracking devices (Bluetooth, WLAN, RFID, broadband), referred to here as beacons, or with the aid of optical solutions.
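The geometry can be sketched for the two-dimensional case. The example assumes three beacons that do not lie on one line and exact distance measurements, which makes the position in the plane unambiguous; real systems have to cope with noisy distances, typically by least-squares fitting over more beacons. The function name `trilaterate` is chosen for this illustration.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def trilaterate(b1: Point, d1: float,
                b2: Point, d2: float,
                b3: Point, d3: float) -> Point:
    """Locate a device from three beacon positions and its distances to them.

    Subtracting the circle equations pairwise yields two linear equations
    a*x + b*y = c and d*x + e*y = f, which are solved with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = b1, b2, b3
    a, b = 2 * (x2 - x1), 2 * (y2 - y1)
    c = d1**2 - d2**2 - x1**2 + x2**2 - y1**2 + y2**2
    d, e = 2 * (x3 - x2), 2 * (y3 - y2)
    f = d2**2 - d3**2 - x2**2 + x3**2 - y2**2 + y3**2
    det = a * e - b * d
    if math.isclose(det, 0.0, abs_tol=1e-12):
        raise ValueError("beacons must not lie on one line")
    return (c * e - b * f) / det, (a * f - c * d) / det
```

For beacons at (0, 0), (10, 0) and (0, 10), a device whose distances to them are 5, √65 and √45 is located at (3, 4).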

Visible Light Communication (VLC) is one of the optical methods. Instead of using radio beacons, it locates the mobile device via fluorescent lamps or light-emitting diodes (LEDs) in the interior lighting. The location of a light is reported by an individual code contained in the light beam, which is not perceived by the human eye. Other options for optical location determination include scanning QR codes with location information or optical trilateration of prominent navigation points via the camera.

The most common method is lateration with low-power Bluetooth beacons (Bluetooth Low Energy, BLE). Whereas GPS (Global Positioning System) derives distance from the propagation time of the signal, BLE systems typically estimate the distance between transmitter and receiver from the received signal strength. If enough beacons are distributed in a store, they can be used to track the customer’s path through the product range. Depending on the customer’s behavior or current location, he or she can, for example, be offered a discount via a push message, other interesting items can be suggested, or further advice can be provided.

The hardware of modern smartphones is perfectly sufficient to support different types of IPS. Both Bluetooth lateration and various optical navigation methods can be used. All that is needed is to provide the appropriate software in the form of a user-friendly app.

By recording and evaluating the customer’s navigation through the store, stationary retailers are methodically catching up with online retailers. Since the mid-1990s, operators of commercial websites in particular have been using tracking and analysis tools such as Google Analytics, with which they collect a wide range of key performance indicators (KPIs) on user behavior and evaluate them to optimize interaction. Such tools have been lacking in brick-and-mortar retail until now. The interests and preferences of customers could only be determined at great expense and on a narrow data basis, for example by panel study or observation of individual customers in the store. However, many of the key data collected on the web can be transferred to stationary retail with the appropriate hardware and software.

Our lives are becoming increasingly digital. The exponential growth rates in online retailing and mobile banking are a clear sign that customers are enthusiastic about digital offerings. Now they also expect a high level of digitization, better customer experience, and simpler products from their insurer.

A look at the topic of insurance makes it clear that only those insurance companies that embrace digitization and can thus meet customer needs better than the competition will thrive. Technological progress offers the insurance industry great opportunities to become more efficient and to focus on individual, tailored products. At the same time, the digital transformation creates hurdles in the digitization of insurance offerings and processes.

The world of insurers is changing, with 78% of Germans wanting to interact digitally with their insurer.

As a result, start-ups that can combine their know-how about insurance products with innovative technical solutions are taking a significant place in the insurance world. The number of so-called InsurTechs continues to grow, with global investments in such companies now exceeding USD 10 billion. In addition, multinational technology groups such as Google, Apple, Facebook, Amazon and Microsoft are challenging the insurance industry by becoming providers themselves.

In our whitepaper Digitization in the insurance industry, we answer various questions about the topic. Here is a small selection:

Order the entire whitepaper free of charge here.

The whitepaper is in German.

Between July 12 and 19, 2021, heavy rainfall caused considerable problems in western Germany. The district of Ahrweiler in Rhineland-Palatinate was particularly hard hit.

In the night from July 14 to 15, 2021, the Ahr valley was hit by a flood catastrophe of almost biblical proportions. Towns, villages and entire regions were massively destroyed by the floods. According to current knowledge, a total of 134 people lost their lives in the floods, and two people are still missing.

Immediately after the full extent of the disaster became clear, Consileon’s management called on all colleagues to nominate initiatives from the affected area that urgently needed support. Since quite a number of our employees live near the devastated areas, we received a number of suggestions. It was particularly important to managing partner Dr. Joachim Schü that the aid should reach those in need directly and immediately.

After only a short time, the management of the IT and management consultancy decided to help these two initiatives quickly and unbureaucratically with substantial funds.

Because so many people were so severely affected, we decided to donate a large part of the sum to the Bürgerstiftung der Volksbank RheinAhrEifel eG hilft Hochwasseropfern (English: Volksbank RheinAhrEifel eG Citizens’ Foundation Helps Flood Victims), since the people on the spot can best decide where funds are most urgently needed. The foundation supports “people and non-profit institutions in the regions affected by the flood disaster”.

Another project was close to the heart of our colleague Horst Schwarz, who had already supported it before the flood: IFAK e. V. – Verein für multikulturelle Kinder- und Jugendhilfe – Migrationsarbeit (Association for multicultural child and youth welfare – migration work), and specifically its multi-generation house in the Dahlhausen district of Bochum. Here, the water caused severe damage to both the building and the inventory, especially in the children’s and youth area. After the summer vacation, the aim had been to delight the children and young people, who were already suffering from the restrictions of the Corona crisis, with new, appealing offers such as a foosball table and a billiard table. The floods ruined that plan, and IFAK had to dispose of the entire facility. Here, too, we supported the reconstruction with a donation that could help immediately.

Flood damage at IFAK

We hope that we were able to contribute at least a little bit to alleviate the suffering of the people in the flooded areas.

What is the measure of a software’s success? Shareholders would probably answer: profits, the lead over the competition, test scores. But these are all secondary effects. Their common condition is that the users of the product are convinced by its design and handling. The user experience (UX) must be right. You can find out exactly what is meant by this in this article.