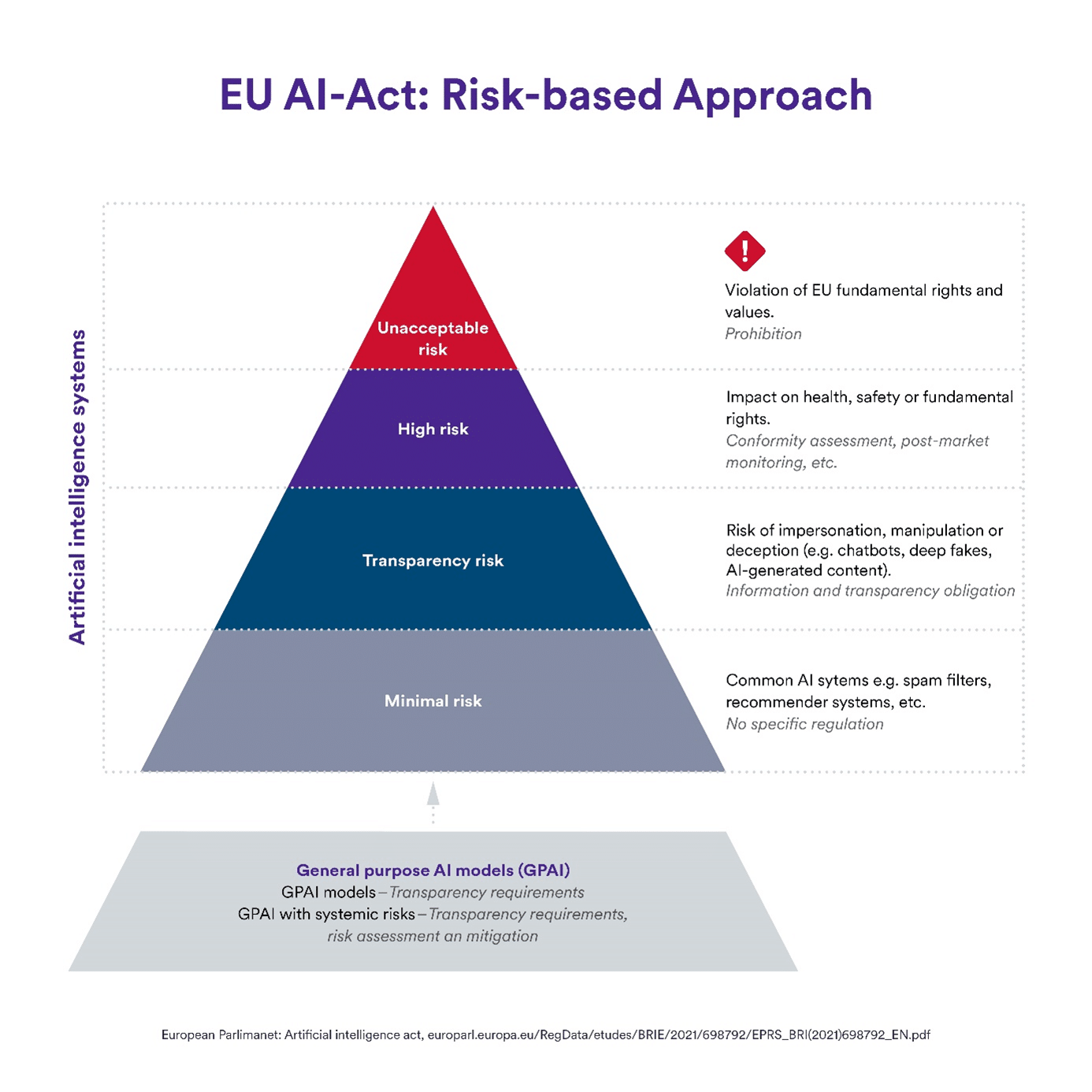

Class 1: Unacceptable risk

The regulation prohibits AI applications that pose an unacceptable risk. These include systems that inherently violate fundamental rights, for example by exploiting human vulnerabilities to manipulate behaviour, classifying people according to biometric or social characteristics (social scoring), or monitoring people or treating them as suspects without cause. The ban also extends to the untargeted, indiscriminate scraping of facial images from the internet or from surveillance camera footage, and to the automated recognition of human emotions in the workplace or in educational institutions. Only law enforcement authorities are granted special powers here, and these are strictly regulated.

Class 2: High-risk systems

High-risk AI systems are those whose use does not automatically harm health, safety, fundamental rights, the environment, democracy or the rule of law, but which are susceptible to deliberate or negligent misuse. They are typically used to manage critical infrastructure, material or non-material resources or personnel.

Such systems will be subject to strict conditions. Take lending, for example: under the AI Act, banks may not let the machine alone decide on a customer's creditworthiness. A human must review the score calculated by the machine and take responsibility for approving or rejecting the loan.

Manufacturers of such high-risk systems must test them thoroughly before placing them on the market, importers and downstream distributors must ensure that the systems comply with the law, and users must monitor their operation. Under the law, final decision-making and supervisory authority rests with humans. The regulation also grants people affected by the decisions of such systems rights to object, to obtain information and to appeal. High-risk systems include, in particular:

- AI systems that are products, or components of products, covered by EU product safety legislation

- AI systems from one of the following eight areas:

  - Biometric identification and categorisation

  - Operation of critical infrastructure

  - Education and vocational training

  - Employment, personnel management and access to self-employment

  - Access to essential public and private services

  - Law enforcement

  - Migration, asylum and border control

  - Administration of justice and democratic processes

Class 3: Transparency risk

The EU legislator classifies AI as posing a moderate risk, or at least a transparency risk, if it does not conflict with fundamental rights but leaves users in the dark about the nature and sources of the service. This applies to chatbots and, above all, to so-called generative AI, i.e. programs that generate synthetic text, images or videos (e.g. deepfakes). Under the law, such applications must disclose that they are machines, label their output as artificially generated, document their training data and its sources, respect the copyrights of those sources and prevent the generation of illegal content.

Class 4: Low risk

No restrictions apply to simple AI systems such as spam filters or recommendation services.